Keeping an Eye on Alertness with Driver Monitoring Systems

Author: Udit Budhia, Senior Director, Automotive Product Marketing at indie Semiconductor

In-cabin monitoring is essential for enhancing safety, security, and the overall experience for vehicle occupants. When it comes to safety, vision-based monitoring plays a crucial role in helping to reduce potential accidents, improving emergency response, and complying with regulations in the evolving landscape of automotive technology. Specifically, vision-based systems improve vehicle safety through the detection of distracted driving and driver fatigue.

Distracted and drowsy driving have been a growing concern in recent years, due to the widespread use of smartphones and electronic devices and multitasking behavior. Further complicating the situation are inexperienced drivers, an aging population, and/or drivers who are not fully engaged or alert while behind the wheel. While public awareness campaigns and hands-free connectivity have contributed to reducing this risk, in-cabin monitoring is an important evolution for driver safety and automated assistance. In this blog, we explore these two scenarios and the ways in which indie is committed to the development of products that help address and improve safety features.

The Dangers of Driver Distraction

The danger of distracted driving is no secret. Distracted driving reportedly claimed over 3,500 lives in the U.S. alone in 2021[i]. In response, modern vehicles are increasingly equipped with advanced driver safety and automated features, such as adaptive cruise control (ACC) and steering assistance, to reduce the workload of the driver and improve driver safety. As these systems continue to evolve and improve, their adoption in vehicles is rising. However, despite these safety features, automotive OEMs expect and count on the human driver to remain engaged and in control of the vehicle to avoid accidents or injury. Interestingly, it is the success of these driver safety systems that poses an unexpected challenge to the car manufacturer.

Drivers face “automation complacency”: they trust the vehicle’s safety system to perform and make decisions and, as a result, become disengaged from driving the vehicle. A driver may start to feel comfortable multitasking, interacting with their phone, eating or drinking, or simply looking out the window. Worse, there have been noted cases of deliberate misuse and abuse of driving assistance functions. A quick search on the web will yield online videos of drivers reading books, playing games, watching videos, or even jumping into another seat in the vehicle, assuming the vehicle is able to drive itself under all conditions.

The Dangers of Driver Fatigue

In addition to driver distraction, there’s the prevalence of driver fatigue. Our lifestyles, work hours, health issues, long commutes, and other daily pressures often take their toll. Simply put, people are tired, and this doesn’t always mix well with driving. Tiredness can slow reaction times and impair decision-making, which can lead to accidents.

According to the U.S. National Highway Traffic Safety Administration (NHTSA)[ii], “in 2017, 91,000 police-reported crashes involved drowsy drivers. These crashes led to an estimated 50,000 people injured and nearly 800 deaths. But there is broad agreement across the traffic safety, sleep science, and public health communities that this is an underestimate of the impact of drowsy driving.” NHTSA estimates that the cost of driver fatigue to society is over $109 billion annually, not including property damage.

Driver Monitoring Systems

To address these issues, OEMs have introduced camera-based and radar-based driver monitoring systems (DMS), and in some countries, they are required in new cars.

Cameras inside the cabin, whether behind the steering wheel, under the rearview mirror, or above an infotainment console, coupled with machine learning algorithms, detect when a driver is distracted or drowsy. During the day, these cameras see the driver’s face in the visible spectrum. When conditions are dark, such as during poor weather or at night, the driver’s face is illuminated with infrared (IR) light that the driver cannot see but the camera sensor can. The ability to see both the visible and IR spectrum with a single sensor sets this camera apart from the camera sensors found in a cell phone or a traditional camera. As a leader in automated driver and safety solutions, indie Semiconductor plays an integral role in enabling these cameras to view both the infrared and visible spectrum with a single sensor, so that the system can work accurately and consistently both at night and during the day.

These sophisticated cameras enable the DMS to detect a drowsy or distracted driver and trigger a visual, audible or haptic (e.g. vibrating seat) cue to the driver to bring their attention back to focus.
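To make the detect-then-cue step concrete, here is a minimal sketch in Python. It is an illustration only, not indie’s or any OEM’s method: it assumes a hypothetical upstream classifier that reports, for each video frame, whether the driver’s eyes are closed, and it raises a cue when the share of closed-eye frames in a sliding window gets too high (a simplified, PERCLOS-style measure). The function name, window length, and threshold are all assumptions chosen for readability.

```python
from collections import deque


def closed_eye_fraction_alerts(eye_closed_flags, window=5, threshold=0.5):
    """Return one bool per frame: True when a drowsiness cue would be raised.

    eye_closed_flags: iterable of bools from a hypothetical per-frame classifier.
    window, threshold: illustrative values, not taken from any standard.
    """
    recent = deque(maxlen=window)  # holds only the latest `window` flags
    alerts = []
    for closed in eye_closed_flags:
        recent.append(bool(closed))
        window_full = len(recent) == window
        fraction = sum(recent) / len(recent)  # share of closed-eye frames
        # Only alert once a full window of evidence is available.
        alerts.append(window_full and fraction >= threshold)
    return alerts


flags = [False, False, True, True, True, True, False, False, False, False]
print(closed_eye_fraction_alerts(flags))
```

In a real system the cue would be routed to a visual, audible, or haptic output, and the window would span seconds of video rather than a handful of frames.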

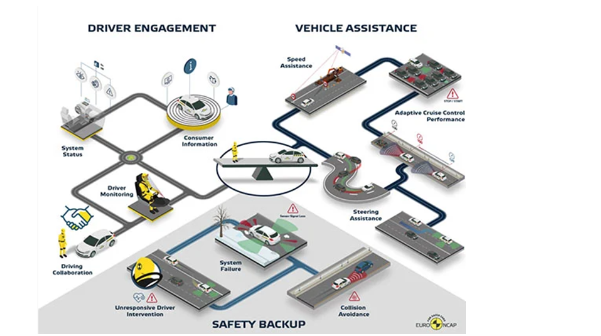

Euro NCAP

Recognizing the role of driver fatigue and distraction in traffic accidents, the European New Car Assessment Program (Euro NCAP) rating system considers DMS in the safety scores that it gives to vehicles. In fact, it considers DMS to be equal in importance to vehicle assistance features such as adaptive cruise control, steering assistance, and speed assistance, which also help drivers avoid accidents.

As the safety score is critical to the value of a car and the cost of insurance for that vehicle, car manufacturers are rapidly deploying DMS in the market. Similar trends and initiatives are taking place in the rest of the world, either through regulation or through safety organizations.

indie’s Advanced Camera Video Processor System-on-Chip

indie’s unique proprietary image sensor processor technology has been critical in successfully processing both the visible spectrum (using a blend of red, green, and blue (RGB) wavelengths captured by the camera sensor) and the infrared (IR) spectrum; this combination is referred to as RGB-IR processing.

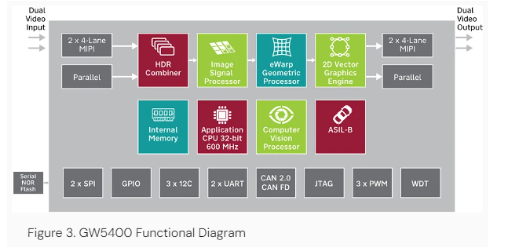

RGB-IR processing is one of the hallmarks of indie’s GW5400 advanced camera video processor system-on-chip (SoC). This device removes the IR data during the day, so that only a clean RGB image remains; if this did not happen, the image would be corrupted by the IR data from the sensor (Figure 2).
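As a rough illustration of what removing IR data from a daytime image can mean, the sketch below subtracts a scaled IR reading from each color channel of a single pixel and clamps the result at zero. This is a simplified assumption, not a description of the GW5400’s actual processing: the function name and the per-channel coefficients are hypothetical, and a production pipeline would rely on calibrated, sensor-specific corrections.

```python
def remove_ir(rgb, ir, coeffs=(1.0, 1.0, 1.0)):
    """Subtract an estimated IR contribution from one RGB pixel.

    rgb: (r, g, b) raw values that include some IR contamination.
    ir: the IR reading associated with this pixel.
    coeffs: hypothetical per-channel IR sensitivity factors (assumed values).
    """
    # Clamp at zero so an over-estimated IR term cannot yield negative light.
    return tuple(max(0.0, c - k * ir) for c, k in zip(rgb, coeffs))


print(remove_ir((120, 100, 90), 20))  # → (100.0, 80.0, 70.0)
```

The clamp matters in practice: if the IR estimate exceeds a channel’s raw value, the corrected channel is set to zero rather than going negative.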

At night, when the IR image is needed and there is little to see in the visible spectrum, the GW5400 creates a “high resolution” IR image by intelligently replacing the RGB information with IR information. Without this capability, the image would have one-quarter the resolution, since IR is only one of the four pixels in the color filter array (CFA) of the sensor (learn more about CFAs in our blog, “Improving Driver Safety with Advanced Signal Processing and Color Filter Arrays”).
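The one-quarter figure follows directly from the CFA layout. The sketch below assumes a hypothetical 2x2 tile in which the IR pixel sits in the second row and second column (real sensors may arrange their filters differently), and shows that collecting only the IR samples leaves a quarter of the original pixel count. It does not attempt to reproduce how the GW5400 fills in the remaining positions.

```python
def extract_ir_plane(mosaic, ir_row=1, ir_col=1):
    """Collect the IR samples from a raw mosaic with one IR pixel per 2x2 tile.

    mosaic: list of equal-length rows of raw sensor values.
    ir_row, ir_col: assumed position of the IR pixel inside each 2x2 tile.
    """
    # Take every second row and every second column, starting at the IR site.
    return [row[ir_col::2] for row in mosaic[ir_row::2]]


raw = [
    [0, 1, 2, 3],
    [4, 5, 6, 7],
    [8, 9, 10, 11],
    [12, 13, 14, 15],
]
ir_plane = extract_ir_plane(raw)
print(ir_plane)  # → [[5, 7], [13, 15]]

total_pixels = sum(len(row) for row in raw)
ir_pixels = sum(len(row) for row in ir_plane)
print(ir_pixels / total_pixels)  # → 0.25
```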

GW5400 (shown in Figure 3) is designed into a large number of driver monitoring systems with major car manufacturers and their Tier-1 suppliers because of its ability to process both the visible (RGB) and infrared (IR) spectrum, enabling cameras to detect the driver both during the day and at night.

GW5400 also supports high dynamic range (HDR) sensors, which enable cameras to capture bright areas without washing out while still resolving details within shadows. This is critical in vehicle applications, where the brightness inside the cabin is often significantly lower than outside the vehicle. HDR improves the ability to see details within the vehicle regardless of how bright the sunlight is outside. This improves DMS performance as well as other in-cabin camera applications, such as seatbelt detection.

Car manufacturers are also moving to higher resolution five-megapixel RGB-IR sensors. These higher resolution sensors allow a single camera, rather than two, to monitor both the driver and the rest of the cabin; by monitoring the whole in-cabin environment, the system can detect whether a child has been left in the vehicle or whether a passenger is not properly wearing their seatbelt. GW5400 works with a five-megapixel RGB-IR sensor, thereby reducing the number of cameras required in the vehicle and the overall safety system cost. GW5400 also meets automotive standards for quality (AEC-Q100 Grade 2) and safety (ASIL-B).

Conclusion

Driven by a combination of legislation, industry initiatives, and consumer expectations, safety for both vehicle occupants and other road users has risen to the top of the automakers’ agenda. Alongside features such as adaptive cruise control (ACC), speed assistance, and steering assistance, in-cabin monitoring will play an increasingly important role in improving safety. Delivering effective and reliable DMS and occupant monitoring system (OMS) solutions demands advanced SoC semiconductor technologies that provide efficient, real-time processing of data from in-cabin camera and radar systems in all types of lighting conditions. With devices such as the GW5400 camera video processor, indie is addressing this demand with automotive-qualified devices that simplify and speed DMS/OMS design and implementation and that are future-proofed for new applications built around higher resolution cameras.
